For every progression, there is an equal and opposite regression.

AI is a topic you can’t avoid hearing about, and for good reason. From the fear-mongering inspired by sci-fi movies to the realism of deepfakes, it is something we need to talk about. And not just talk about: we need to work on viable, ethical solutions that let it keep advancing without violating privacy and autonomy. It has been quite polarizing, with some strongly supporting it for its accessibility and automation and others condemning its lack of ethical regulation.

Commercially, probably the biggest use of AI has been in art. Models like DALL-E or Midjourney create anything from fantasy landscapes of modern people lounging with dragons to a 1:1 recreation of the Mona Lisa. The biggest pushback against these models came from artists who, while making their creations public, did not consent to having their work mined as AI training data. I often see people prompt an AI model to copy a specific artist’s work and produce a commission, rather than paying the artist to make it.

The deepfake problem alone has escalated to the point that it has reached the desks of White House representatives. A big push for this was the recent Taylor Swift incident, in which a user used AI to scrape images of her from around the internet and create nude images of her that she never took and never consented to. Imagine: if this can happen so realistically to a celebrity, what impact could it have on a social and political level, especially in terms of image, trust, and information exchange?

Even more concerning, at the beginning of 2024 a fake robocall imitating President Joe Biden surfaced, urging New Hampshire voters not to vote.

Hear fake Biden robocall urging voters not to vote in New Hampshire

As AI evolves and learns, these types of situations become more and more of a concern.

As of today, 11 states are working on legislation to regulate AI. Many of them are leaning toward an “if a person is depicted by AI, that person has the right to sue the creator” stance. That is not a direct fix for the problem, but it may act as a deterrent after the fact.

So what can we, the day-to-day users, do to combat this?

The Nightshade Team

At the University of Chicago, Ben Zhao assembled a group that had noticed a problem with many generative AI models: the data they train on is being taken from human artists without consent (often under an opt-out model) and with little to no enforcement or regulation.

Thus Glaze and Nightshade were born.

Glaze was the first creation: a defensive tool to help protect digital media from AI model training. It acts as a sort of “visual” force field that can be added to a piece of art, making it look unrecognizable to an AI model but normal to the human eye. Think of it like 3D glasses: with them off, things look normal; put them on and the image looks very different, even though the actual movie doesn’t change. This lets art still be shared openly online while remaining effectively hidden from AI training, since the model doesn’t understand what it is looking at.

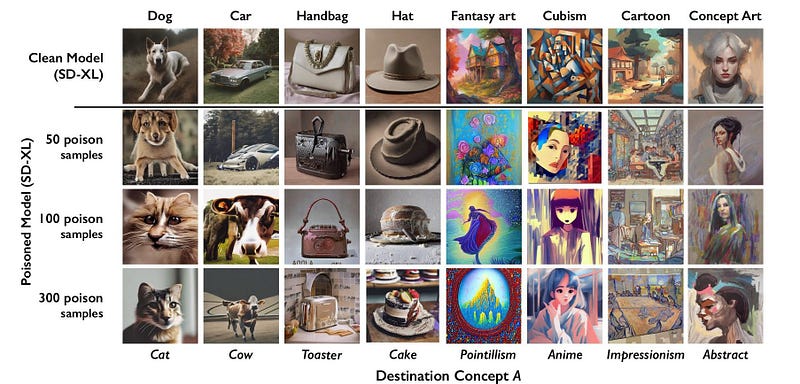
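To get a feel for how a change that is invisible to the eye can still fool a model, here is a toy sketch in Python. It is not Glaze’s actual algorithm. It is the textbook “adversarial perturbation” idea applied to a made-up linear feature detector, and the image, the weights, and the `score`/`cloak` functions are all hypothetical. Every pixel moves by at most 0.02 on a 0-to-1 scale, but because each nudge points in the direction the detector is most sensitive to, thousands of tiny nudges add up to a large shift in what the model “sees”.

```python
import random

# Toy setup (hypothetical): a 10,000-"pixel" image with values in [0, 1]
# and a linear "feature detector" whose score stands in for what the model sees.
random.seed(0)
N_PIXELS = 10_000
weights = [random.uniform(-1.0, 1.0) for _ in range(N_PIXELS)]
image = [random.uniform(0.2, 0.8) for _ in range(N_PIXELS)]


def score(pixels):
    """Linear detector: dot product of the weights and the pixel values."""
    return sum(w * p for w, p in zip(weights, pixels))


def cloak(pixels, epsilon):
    """Nudge every pixel by at most epsilon in the direction that raises the
    detector's score, keeping values in the valid 0-1 range."""
    return [
        min(1.0, max(0.0, p + epsilon * (1 if w > 0 else -1)))
        for w, p in zip(weights, pixels)
    ]


cloaked = cloak(image, epsilon=0.02)
max_change = max(abs(a - b) for a, b in zip(image, cloaked))
print(f"largest per-pixel change: {max_change:.3f}")
print(f"detector score before: {score(image):.1f}, after: {score(cloaked):.1f}")
```

Running it should show a per-pixel change of about 0.020 while the detector score jumps by roughly 100. Real image models are not linear and Glaze computes its cloak differently, so treat this only as an intuition for why small, targeted changes matter.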

Nightshade, however, is the real heavy hitter, as this tool was built for attack. Whereas Glaze masks the image, Nightshade scrambles its pixels (as seen through the eyes of the AI) so that the picture no longer looks like what it is. The AI therefore receives incorrect data and becomes poisoned, learning to associate things incorrectly. As it takes in more of this data (models are often trained on billions of data points), it becomes more and more confident in the WRONG association. Not only that, but it also warps its images of related things and topics. See the image below of examples from the researchers: from top to bottom, the output changes drastically as the model is exposed to more poisoned data.

Example from the Researchers

Think of looking at the Mona Lisa while your mind insists you’re looking at The Scream. You begin to associate “smile” (as well as related words like ‘grin’, ‘happy’, and ‘laugh’) with “scream”. The next time someone prompts for anything with a smile, say a “happy family at the holidays”, they get a nightmarish image of screams and horror.
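The same drift can be shown in miniature with another toy Python sketch. Again, this is not Nightshade’s method: it assumes a made-up two-number “feature space” and a model that learns each concept as the average of every image captioned with it (a nearest-centroid model). Poisoned samples carry the caption “smile” but look like a scream to the model. `SMILE_LOOK`, `SCREAM_LOOK`, `learned_smile`, and `closest_concept` are hypothetical names chosen for illustration.

```python
# Hypothetical 2-D "feature space": how a smile and a scream look to the model.
SMILE_LOOK = (1.0, 0.0)
SCREAM_LOOK = (0.0, 1.0)


def centroid(points):
    """Average of a list of 2-D points."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def learned_smile(clean_count, poisoned_count):
    """What the toy model learns 'smile' looks like: the average of every
    image captioned 'smile'. Clean images look like a smile; poisoned ones
    carry the same caption but look like a scream to the model."""
    training = [SMILE_LOOK] * clean_count + [SCREAM_LOOK] * poisoned_count
    return centroid(training)


def closest_concept(point):
    """Name the known concept nearest to a point (ties go to 'smile')."""
    def dist2(a, b):
        return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

    if dist2(point, SMILE_LOOK) <= dist2(point, SCREAM_LOOK):
        return "smile"
    return "scream"


for poisoned in (0, 50, 150, 300):
    smile = learned_smile(clean_count=100, poisoned_count=poisoned)
    rounded = tuple(round(v, 2) for v in smile)
    print(f"{poisoned:>3} poisoned -> 'smile' learned as {rounded}, reads as {closest_concept(smile)}")
```

With no poisoned samples, the learned “smile” sits exactly on the smile look. With 50 poisoned samples against 100 clean ones it has already drifted to (0.67, 0.33), and by 150 it has crossed over and lands closer to “scream”, so a prompt for a smile would come back looking like a scream. Real generative models are far more complex; this toy only shows the direction of the effect.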

The concern many have is what you would expect from something called ‘poison’ and self-labeled as an attack: that it is a virus that promotes cyberattacks, meant to cause harm and to undermine AI companies.

Harm? No.

Undermine? Yes.

“Nightshade’s goal is not to break models, but to increase the cost of training on unlicensed data, such that licensing images from their creators becomes a viable alternative.” — Nightshade Team Website

The difference between this and a traditional cyberattack is not only scope but also intent. This program is not meant to deceive users, but to pressure the companies that purposefully ignore and mishandle user data. Where boundaries are already in place to protect users (opt-outs, privacy policies, etc.), Nightshade makes infringers pay a cost for every piece of media they take in violation of them, discouraging those malicious practices.

This is only a temporary solution, however. As with all attack-like programs, two more players usually join the game, neither of which is the company/AI or the user/artist:

- The Black Hat: those who would want to take this tool to the next level, getting deep into the pipelines of an AI’s neural network to cause irreparable harm and spread it to other AI and non-AI services.

- The White Hat: those who are there to protect the AI. They would build defenses against Nightshade and/or create countermeasures for it.

As with all technological changes, it is a game of tug of war. As one side becomes more advanced, it pushes the other side to grow in turn.

While tools like Nightshade and Glaze are new to the field of AI countermeasures, they will not be the last.

“As with any security attack or defense, Nightshade is unlikely to stay future-proof over long periods. But as an attack, Nightshade can easily evolve to continue to keep pace with any potential countermeasures/defenses.” —The Nightshade Team Website

We can only hope that AI-combating tools like these will continue to be created and used to push for mandatory ethical AI usage.

Check out Nightshade and Glaze!

Glaze - Protecting Artists from Generative AI

glaze.cs.uchicago.edu